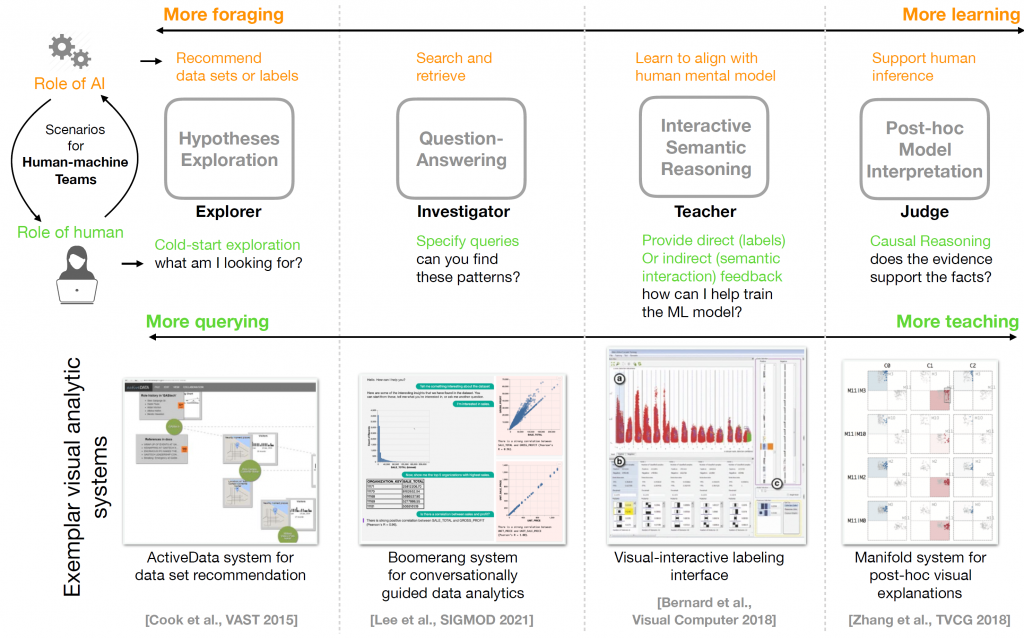

“Detect the expected, discover the unexpected” was the founding principle of the field of visual analytics. This mantra implies that human stakeholders, such as a domain expert or data analyst, could leverage visual analytics techniques to seek answers to known unknowns and discover unknown unknowns in the course of the data sensemaking process. In this paper, we argue that in the era of AI-driven automation, we need to recalibrate the roles of humans and machines (e.g., a machine learning model) as teammates. We posit that by realizing human-machine teams as a stakeholder unit, we can better achieve the best of both worlds: automation transparency and human reasoning efficacy. However, this also increases the burden on analysts and domain experts, who must perform more cognitively demanding tasks than they are used to. In this paper, we reflect on the complementary roles in a human-machine team through the lens of cognitive psychology and map them to existing and emerging research in the visual analytics community. We discuss open questions and challenges around the nature of human agency and analyze the shared responsibilities in human-machine teams.

Related publication:

- John Wenskovitch, Corey Fallon, Kate Miller, and Aritra Dasgupta. “Beyond Visual Analytics: Human-Machine Teaming for AI-Driven Sensemaking,” in Proceedings of the IEEE VIS Workshop on Trust and Expertise in Visual Analytics (TREX). TREX’21. 2021, pp. 40-44. DOI: 10.1109/TREX53765.2021.00012.

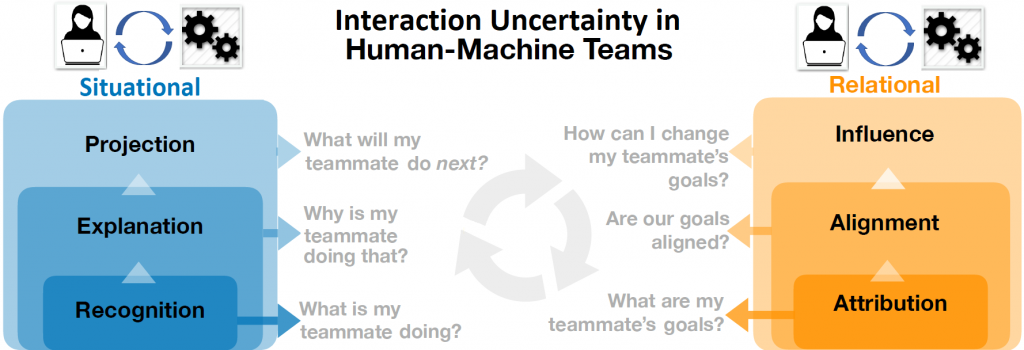

With the increasing use and adoption of artificial intelligence, the reliability of modern data systems will be driven by a tighter teaming between human experts and intelligent machine teammates. As in the case of human-human teams, the success of human-machine teams will also rely on clear communication about mutual goals and actions. In this paper, we combine related literature from cognitive psychology, human-machine teaming, uncertainty in data analysis, and multi-agent systems to propose a new form of uncertainty, interaction uncertainty, for characterizing bidirectional communication in human-machine teams. We map the causes and effects of interaction uncertainty and outline potential ways to mitigate uncertainty for mutual trust in a high-consequence real-world scenario.

Related publication:

- John Wenskovitch, Corey Fallon, Kate Miller, and Aritra Dasgupta. “Characterizing Interaction Uncertainty in Human-Machine Teams,” in 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS). 2024, pp. 1-6. DOI: 10.1109/ICHMS59971.2024.10555605.